Suppose a shopkeeper approaches you with a problem.

Their business is growing every year. All the top line numbers are increasing—sales are up, prices have been adjusted to match inflation, and they’ve thoughtfully expanded their product offerings. Yet somehow, the business seems to be making less in profits.

The first question you would ask is: what are the costs?

Without a clear picture of costs, it would be impossible to reasonably suggest how to improve the business. This is a current conundrum for science.

There is a widening discussion among scientists, administrators, and policymakers about how to improve the institution of science. The question has inspired conferences, new organizational experiments, and an emerging discipline—metascience—which is turning the scientific method back on itself. There is real, pragmatic pressure to do science better.

The value of scientific research is notoriously hard to measure. We know how much we're spending on science. For example, the U.S. federal government spends roughly $100 billion on basic research every year. We also know what’s emerging from that investment: the papers, patents, and trained scientists. But it’s still difficult to accurately measure those returns. The best attempts are relative. For example, Patrick Collison and Michael Nielsen argued in The Atlantic that the returns to scientific investment seem to be slowing.

As with the shopkeeper, closer scrutiny of scientific costs could yield opportunities for improvement.

For the past few decades, the most visible discussion around scientific costs has been a debate around administrative overhead, when an equally important question has been hiding in plain sight: why are scientific tools so expensive?

Direct vs. Indirect

For any individual scientific grant, the budget is divided into two categories: direct and indirect costs. The direct costs include researcher salaries and equipment. The indirect costs cover overhead: the university and organizational administration, facilities, and support.

For the past few decades, the debate has been about rising indirect costs. Philanthropic funders began to push back against university overheads that had drifted ever higher. It had become common—and still is—to see more than 50% indirect rates on top of the direct costs listed in the grant. Some private funding organizations, like the Bill & Melinda Gates Foundation, pushed back and instituted policies to limit those amounts. Operating non-profits countered with a campaign—"The Overhead Myth"—attempting to explain the value and importance of good non-profit management.
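To make the arithmetic concrete, here is a minimal sketch in Python of how an indirect rate translates into a total award. The $200,000 direct budget is a made-up figure and the function names are mine. The model is also a simplification: it applies the rate to all direct costs, whereas real rate agreements often apply it to only a subset of them.

```python
def grant_total(direct_costs: float, indirect_rate: float) -> float:
    """Total award when overhead is charged as a fraction of direct costs."""
    return direct_costs * (1 + indirect_rate)


def overhead_share(indirect_rate: float) -> float:
    """Fraction of the total award that ends up as overhead."""
    return indirect_rate / (1 + indirect_rate)


direct = 200_000  # hypothetical direct costs, in dollars
rate = 0.50       # the 50% indirect rate mentioned in the text

print(f"total award: ${grant_total(direct, rate):,.0f}")         # $300,000
print(f"overhead share of total: {overhead_share(rate):.1%}")    # 33.3%
```

Under these assumptions, a 50% rate on top of direct costs means about a third of every award dollar goes to overhead, not half.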

The administrative cost issue is thorny, and certainly deeper and more complex than I can convey here. But the debate has overshadowed a more promising frontier for inquiry: tools.

Science & Manufacturing

Direct costs dictate research directions.

For personnel costs, the influence is straightforward. Grant availability can push more principal investigators to undertake a problem or enable the hiring of additional post-doctoral researchers in a lab.

The costs of tools are just as important, but much more difficult to pin down. Scientific equipment is an essential part of discovery. New technologies are enablers of new ideas and perspectives, and vice versa. Throughout history and across disciplines—from telescopes to microscopes, synchronized clocks to automated genomic sequencers—technology sets the pace for knowledge and insight. It's personal for scientists, too. Access to cutting-edge tools can make or break careers by enabling priority in experimentation and, in turn, earlier publication.

As with personnel costs, tool costs dictate research directions, whether that’s determining the size of a telescope to build or deciding what kind of mice to use. Cost is the driving factor in deciding which equipment a lab will buy, share, or just leave on its wishlist. Relatedly, costs affect the pace of discovery. For example, the dropping costs of genetic sequencing have created an explosion of new research. When tool costs go down, scientific output tends to go up, along with industrial and commercial applications.

Analyzing the cost of tools is harder than just looking up prices on Amazon. The metascientists have approached the issue, but haven’t directly engaged.

Kanjun Qiu and Michael Nielsen laid out a metascience vision and perspective for how to improve the social processes of science. It took them two years of research to capture their important argument: we’ve only explored a small fraction of the possible arrangements for doing science. Even amongst their expansive scientific world-building, tools only got a footnote:

"It's striking that the builder of the first telescope is not remembered by most scientists, but Galileo is. The usual view is: Galileo made the scientific discoveries, but the toolbuilder did not. But they did enable discovery. This is an early example of a pattern that persists to this day. It's beyond the scope of this essay to delve deeper, but fascinating to think upon."

Paula Stephan, a leading science economist and author of the book How Economics Shapes Science, came to a similar cliff. Stephan dedicates an entire chapter to tools and materials, but an analysis of why they cost so much is missing. Stephan points out that "despite the important role that equipment plays in research, little is known about the degree of competition in the market for equipment."

That sums up the scientific attitude towards tool-building: forgotten footnotes.

I have a pet theory about why scientific instruments remain expensive and exquisite. And why this arrangement has settled into a "don't ask too many questions" equilibrium. It's twofold.

First, scientists never have to care. Scientists write grants and simply include the cost of equipment in the budget. The grant is met by review committees with a binary "yes" or "no" answer. They get no points for trying to reduce costs. The lack of downward cost pressure on behalf of the scientists – the end users, in this case – means manufacturers don't try to compete on price.

The second half of my theory is more subtle: a lack of exposure. Most scientists aren't exposed to the realities and possibilities of manufacturing. It's generally not part of the curriculum in higher education, and it's difficult knowledge to acquire outside of direct experience or vocational training. The flip side is also true. The folks who understand the tools of mass production and the realities of global supply chains rarely consider the plight of the scientist. And when they do consider it, they don't like what they find: small markets, convoluted purchasing processes, and customers who demand almost constant modification and customization.

It's worth clarifying that many scientists do build tools. In fact, some of the most incredible machines on (and off) earth are built by scientists: the James Webb Telescope, CERN, and the IceCube Neutrino Observatory. But rarely do scientists build tools using modern manufacturing techniques that would allow them to reap the economic benefits of scale. In some cases, the reasons are clear. It's hard to justify needing more than one neutrino observatory. I suppose humanity could aspire to dozens of Thirty Meter Telescopes, but through sharing and cooperation amongst scientific communities, it’s reasonable to hope for just one (or a few). In these cases, scientists coordinate their research interests to design, fund, and build the tools that will advance the entire field.[1]

Even on smaller scales, scientists are constantly rigging their experiments with makeshift equipment given tight budgets and field realities. If they have a specific question they want to ask, they will go to extreme lengths to hack together a device to capture the right experimental data. I've seen this firsthand on countless occasions in oceanographic research settings. These impressive MacGyver-like machines and setups prove what’s possible.

However, there’s another level (or several). Making one tool is impressive, but designing and manufacturing thousands is an altogether different challenge. There’s a reason that most projects don’t take the leap. For any individual scientist, their career progression depends on their publication record. The investment of time and money into manufacturing doesn’t make sense, even though taking the extra step—making a given tool available for others—helps raise the tide for everyone.
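The gap between making one tool and manufacturing thousands can be sketched with the simplest possible cost model: a fixed cost (design, tooling, certification) spread across every unit, plus a variable cost per unit (parts and assembly). The dollar figures below are invented for illustration, and real cost curves are lumpier than this.

```python
def unit_cost(fixed_cost: float, variable_cost: float, units: int) -> float:
    """Average cost per unit: fixed costs amortized over the run, plus parts and labor."""
    if units <= 0:
        raise ValueError("units must be a positive integer")
    return fixed_cost / units + variable_cost


FIXED = 50_000   # hypothetical one-time design and tooling cost, in dollars
VARIABLE = 40    # hypothetical parts and assembly cost per unit, in dollars

for run_size in (1, 100, 5_000):
    print(f"{run_size:>5} units -> ${unit_cost(FIXED, VARIABLE, run_size):,.0f} each")
```

With these made-up numbers, a one-off costs $50,040, a run of 100 costs $540 each, and a run of 5,000 costs $50 each. The catch is that someone has to pay the fixed cost up front, which is exactly the step an individual lab has little incentive to take.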

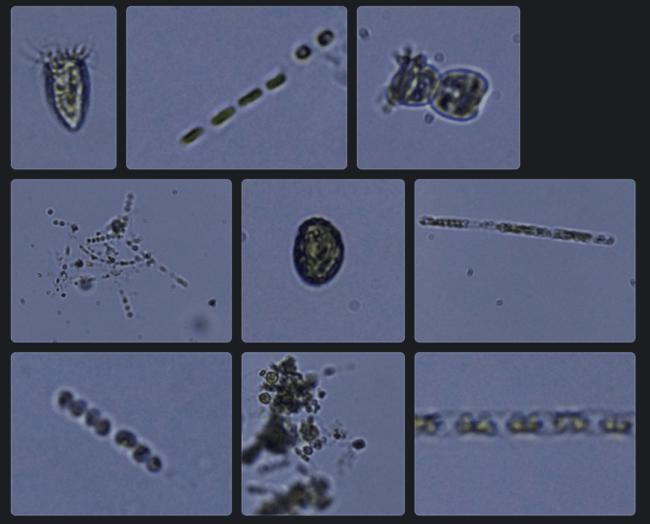

In the microbial sampling expedition kit described above, Adi Gajigan is using the PlanktoScope tool developed by the Prakash Lab—a Raspberry Pi-based device developed to automate plankton sampling and identification. Manu Prakash and his team are among a small group of scientists who understand the leverage of affordable tools. They have developed and implemented a philosophy of “frugal science” which aims for radical accessibility of both the tools of science and the camaraderie of scientific exploration. The Foldscope, an origami paper microscope, is perhaps their most famous invention.

I lived through a similar experience. We started OpenROV in 2011 as two friends with less than a few thousand dollars between us. Our goal was a small underwater remotely operated vehicle (ROV) that we could send to depths of 100m. At the time, a capable device with the specs we wanted cost north of $20,000. We started where we could: off-the-shelf parts and access to the tools at our local makerspace. Our timing was good, as new single-board microcontrollers and microcomputers like the BeagleBone and Raspberry Pi were just becoming available.

Fast forward through a Kickstarter project, a startup company, and a vibrant amateur community of builders; the world is much different. Multiple companies and projects started and grew off that initial momentum. The result is an expanded (and more affordable) marine robotics industry. Today you can find capable devices that meet our initial specs on Amazon from a number of companies, and many for less than $1,000. Scientists have used this new class of vehicles to discover new deep-water kelp forests, monitor invasive tunicates, and beyond. From the perspective of our humble garage beginnings, this is mission accomplished.

We were not alone in the marine robotics effort, or in the broader effort to democratize the tools of science. There are now hundreds of other projects that have connected the dots between low-cost components, the growing capabilities of the digital manufacturing suite (3D printers, laser-cutters, etc.), and a desire for some unaffordable piece of scientific equipment. Ariel Waldman wrote a report for the White House Office of Science and Technology Policy about the nascent trend of building low-cost scientific tools in 2013. Ten years later, there are battle-tested entrepreneurs (like us) who've weathered the gauntlet of manufacturing as well as a vibrant community of new builders who are prototyping the next wave of devices and mechanisms.

There's currently no database of these efforts, but they span the sciences.[2] The idea has grown tremendously in terms of participation and technical capability since Waldman's initial report. The Gathering of Open Science Hardware (GOSH) has been a nexus point for this global community, hosting both events and virtual discourse. GOSH was a hybrid outgrowth of two other movements: open hardware and open science.

The open-source hardware strategy (releasing plans online to allow others to reproduce and remix a given electronic or mechanical design) was developed more than a decade ago by hardware hackers who wanted to recreate the culture and momentum of open-source software in the physical world. Real-world friction and manufacturing realities have held the movement back from catching up to its digital counterpart, but there are success stories like the Arduino microcontroller, which has accelerated embedded systems prototypes around the world.

Open science has been a decades-long push by scientific activists towards making the results and process of science more transparent and available for public review. The open science community has made steady progress towards open-access publications, the inclusion of source data, and, most recently, the adoption of registered reports.

GOSH adopted both the tactics and goals of its parent movements. The community encourages the sharing and easy replication of equipment designs and lobbies for institutional adoption of its methods. From the GOSH perspective, truly open science demands open hardware: replication at every step of an experiment, right down to the tooling. So far, open tools have meant cheaper tools because they’ve been made with mostly off-the-shelf components and digitally fabricated designs. The popularity of the OpenFlexure microscope is an example of open science hardware gone right.

Frugal Science, GOSH, and their corresponding projects are often inspired by idealism, but they’re sustained through economics. They are disruptive innovations in the true Christensen sense of the term: an initially-harder-to-use product that finds a niche community of users, steadily increasing in competitive performance with their commercial counterparts. We should cheer for continued momentum. In fact, we should do more than cheer: we should incentivize.

Bridging the Gap

We know the economics of science can change with big money efforts. The Human Genome Project (HGP) kicked off in 1990 with the goal of sequencing the human genome. The program did much more than its stated goal of creating a map of the first human reference genome.

The budget, $3.8 billion over the 13-year length of the program, was a massive public investment in the biological sciences. The HGP kickstarted a revolution in technology development that continues to this day. The project initially relied on Sanger sequencing methods, and new techniques and strategies were employed to speed up the process. The Celera team used a shotgun sequencing approach. Like every human achievement, doing something first proves it can be done, but the following efforts show how it can be done better.

A variety of new approaches came to market soon after the HGP, now known collectively as Next Generation Sequencing (NGS) technologies. A race between technologies and performance has pushed the costs of sequencing down precipitously. The latest announcement from Illumina promises a $200 price to read a person's entire genetic code, with their eventual target of $100 within sight.

The financial outcomes of the HGP investment are clear and phenomenal. It kick-started the genomics industry. As early as 2010, the program had reportedly created nearly $800 billion in economic output, and, in 2010 alone, the federal tax revenues were estimated to be roughly equivalent to the entire cost of the program.

The effects of the HGP have been profound for science, medicine, and industry – from providing insights on disease treatments to unlocking new understanding about the natural world. The program made civilizational progress. Despite this success, there are still too few people working on the frontiers of scientific tooling. We could be making this kind of progress in many directions and at multiple scales. And we have the meta tools to accelerate the trend.

One method is Focused Research Organizations (FROs), a new type of non-profit research organization dedicated to advancing specific and time-bound challenges in a given scientific field. You can imagine them as miniature HGPs – focused teams and efforts with budgets of tens of millions of dollars instead of billions. The idea was proposed by Adam Marblestone and Sam Rodriques in 2020 and has gained significant momentum in a short period of time. Since their white paper was published, numerous FROs have spun up to address various scientific bottlenecks: new model organisms, brain mapping, and many more to come.

More FROs are a welcome development, as are the open-source prototypes of scientific equipment. Ultimately, the long-term impact of these tools is dependent on economic tailwinds to sustain their momentum. And, even there, we have levers to pull.

Making Scientific Markets

There is an emerging subset of applied economics called "market shaping" which uses new mechanisms to solve problems and fix inefficiencies. The most popular idea has been Advance Market Commitments (AMCs), a philanthropic purchase order designed to incentivize producers to deliver at price points that can reach underserved markets. The idea was proposed by the economist Michael Kremer and made famous by the Bill & Melinda Gates Foundation’s billion-dollar bet on GAVI. More recently, a version of the idea was used to speed up the development and distribution of COVID-19 vaccines.
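One simplified reading of the mechanism, sketched in Python: a funder promises a per-unit top-up on a fixed number of units, so producers can sell at a price end users can afford while still earning enough to justify the production run. The class, its field names, and every number here are hypothetical, and real commitments carry more terms than this (eligibility criteria, price caps after the subsidy runs out, and so on).

```python
from dataclasses import dataclass


@dataclass
class AdvanceMarketCommitment:
    committed_units: int     # units the funder promises to subsidize
    subsidy_per_unit: float  # top-up the funder pays the producer per unit
    buyer_price: float       # price the end user (say, a lab) actually pays

    def funder_outlay(self, units_sold: int) -> float:
        """What the funder pays: the top-up, capped at the committed quantity."""
        return min(units_sold, self.committed_units) * self.subsidy_per_unit

    def producer_revenue(self, units_sold: int) -> float:
        """What the producer earns: buyer payments plus the funder's top-up."""
        return units_sold * self.buyer_price + self.funder_outlay(units_sold)


# Hypothetical instrument: labs pay $200, the funder adds $300 on the first 1,000 units.
amc = AdvanceMarketCommitment(committed_units=1_000, subsidy_per_unit=300.0, buyer_price=200.0)

print(amc.producer_revenue(1_500))  # 600000.0
print(amc.funder_outlay(1_500))     # 300000.0
```

The design choice worth noticing is the cap: the funder's exposure is known in advance, while the producer sees a guaranteed order large enough to justify tooling up.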

I've made the argument that AMCs should be used to spur more affordable scientific equipment:

The model stands in stark contrast to the existing tools of philanthropy, which have traditionally operated by distributing grants, awarding prizes, and recently “impact” investing into purpose-aligned companies. The AMC spurs action by imitating one of the most powerful actors in the capitalist system: the customer. The bigger the purchase order, the faster companies will line up to serve.

It should be emphasized that AMCs are not silver bullets. They were only part of the COVID-19 playbook. They work as a powerful force to ensure production is stable, distribution is equitable, and companies have a financial incentive to produce. But they are only effective as part of a bigger strategy.

Another part of that scientific tool strategy could be Equipment Supply Shocks:

An Equipment Supply Shock (ESS) is a targeted increase in the availability of (normally) expensive or hard-to-access tools with the anticipation that new users will discover productive new uses.

I've seen this work with scientific tools before, with us and others. We used the technique to support hundreds of early-career scientists and conservation teams through the donation of our underwater drones. I’m still getting messages about new discoveries and scientific careers that were boosted by that donation. The Foldscope team did something similar: giving out thousands of their origami microscopes to curious minds around the world.[3]

Market shaping takes the systemic problems head-on. The mechanisms work specifically to bridge the gaps between research and development—between prototype and product—by smoothing out the cost curves of mass production. That doesn't mean that more traditional kinds of support wouldn't be effective. Grants to develop new tools would be most welcome. The only focused effort I know of is the Tool Foundry accelerator funded by Schmidt Futures and the Moore Foundation. More kickstarts are needed.

Awareness is a big part of the equation. The GOSH community in particular has built a strong foundation of knowledge sharing and mutual support. So far, the open science hardware trend has mostly been an amateur technology effort, but it's worth expanding.

More scientists, policymakers, and funders should care about the intersection of science and manufacturing. We should hope for better, cheaper, and more accessible scientific tools. The examples have already helped to transform disciplines—even society—in surprising and beneficial ways.

The first question we should be asking to make science better: what are the costs?

[1] Scientific coordination at this scale is messy and inspiring. I've attended the decadal Ocean Obs Conference and seen it firsthand. Interested scientists gather to chart out a decades-long research agenda, including proposing and developing global sensing infrastructure that they all (mostly) agree will advance the field furthest, even though some won’t be working long enough to reap the benefits. I’m now convinced: our best work emerges when we design for following generations.

[2] Bri Johns from GOSH is spearheading a catalog effort, per this thread.

[3] What are they exploring, you ask? Behold the Microcosmos.
